Google’s Robotic Transformer 2: More Than Meets the Eye

Google DeepMind’s vision-language-action (VLA) model, which transforms web knowledge into robotic control, recently landed on Earth with a truly cutting-edge machine learning algorithm.

New Vision for Vision Language Model Software

Specifically, Google DeepMind's Robotic Transformer 2 (RT2) is an evolution of vision language model (VLM) software. Trained on images from the web, RT2 also draws on robotics datasets to manage low-level robot control. Traditionally, VLMs have combined visual and natural language inputs to accomplish more complex tasks. Of course, ChatGPT is at the front of this trend.

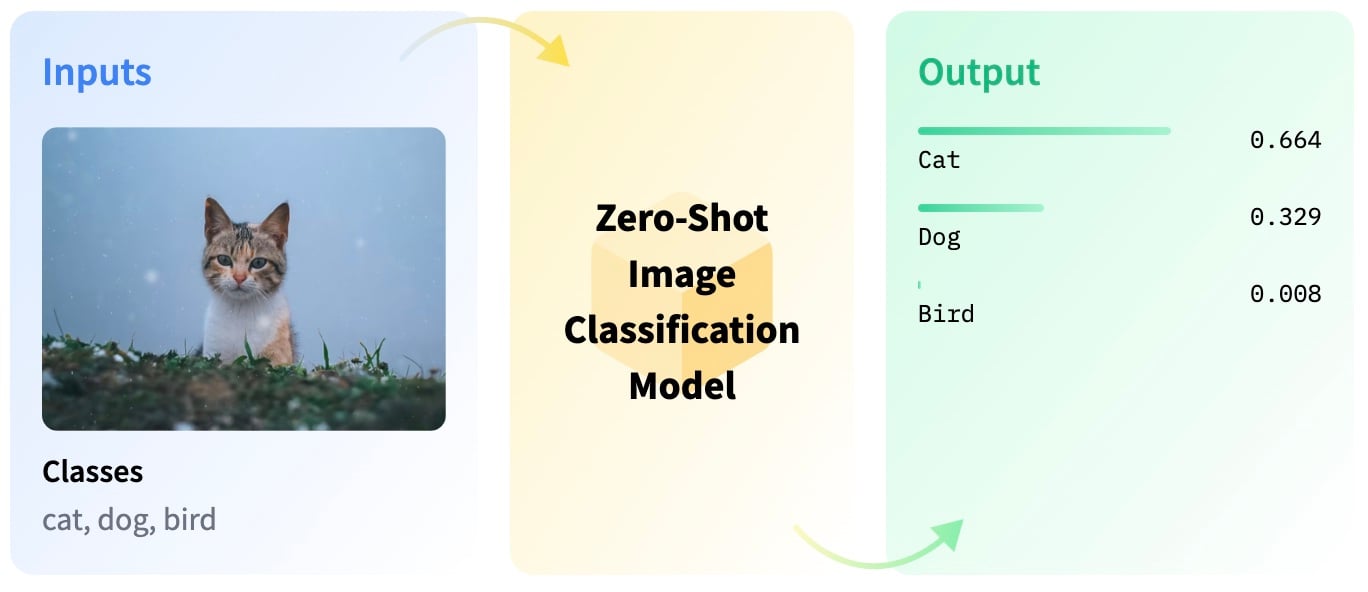

One example of a VLM application is zero-shot image classification, an approach introduced in 2009 to make machine learning more “human.” In a recent submission to Towards Data Science, Ekin Tiu offered an intuitive overview of how a model can recognize what it hasn’t seen.

In zero-shot classification, a vision language model is given an image it has not seen in its training data set and is tasked with returning a text-based classification from a list of potential categories. The accompanying image does a good job of summarizing how the model combines text and visual data.

Figure 1. An example of a VLM. Image used courtesy of Hugging Face
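As a rough illustration of the zero-shot idea described above, the sketch below scores one image against a list of candidate text labels and returns the most likely label. It assumes the image and the labels have already been mapped into a shared embedding space by some VLM; the three-dimensional vectors, the label prompts, the `temperature` value, and the function names are all made up for illustration and do not come from any particular model.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def zero_shot_classify(image_embedding, label_embeddings, temperature=0.07):
    """Return a probability for every candidate label (hypothetical helper)."""
    labels = list(label_embeddings)
    scores = [
        cosine(image_embedding, label_embeddings[label]) / temperature
        for label in labels
    ]
    return dict(zip(labels, softmax(scores)))

# Toy embeddings standing in for the outputs of a real image and text encoder.
image_embedding = [0.9, 0.1, 0.2]
label_embeddings = {
    "a photo of a cat": [1.0, 0.0, 0.1],
    "a photo of a dog": [0.1, 1.0, 0.0],
    "a photo of a robot arm": [0.0, 0.2, 1.0],
}

result = zero_shot_classify(image_embedding, label_embeddings)
best = max(result, key=result.get)
print(best)
```

The model never needs a training example labeled with any of these categories. It only needs the image and the label text to land close together in the shared space.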

What Makes RT2 Ready for Optimus Prime ... Inc.

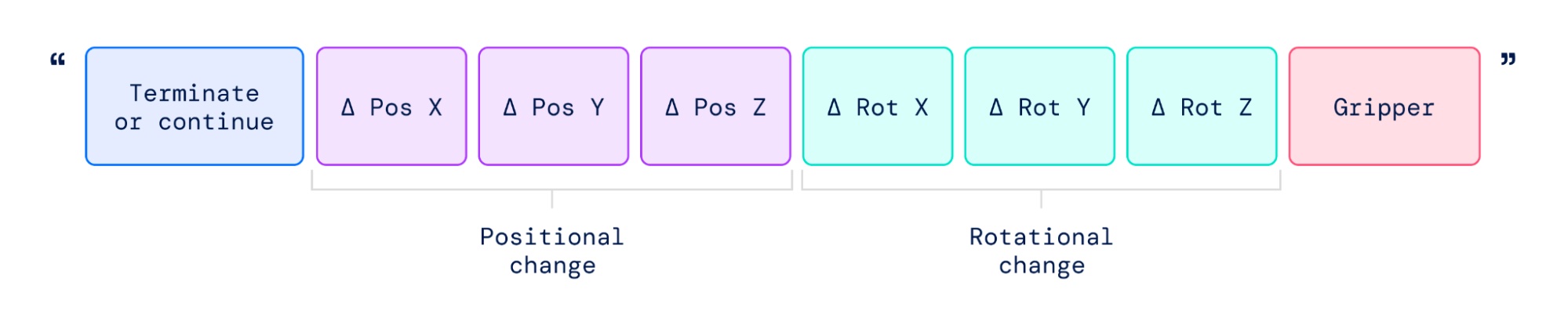

What makes the RT2 model prime enough for engineers at “Optimus Prime Machines Inc.”? It is the way Google researchers figured out how to “transform” data into string, or text-based, outputs. These string outputs can then be interpreted by natural language tokenizers and converted into robot actions.

Figure 2. Robot trajectory output in the form of a string. Image used courtesy of Google
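To make the string-to-action idea more concrete, here is a minimal sketch of how such an output string might be decoded. It assumes a layout of eight space-separated integers: a terminate flag, three position deltas, three rotation deltas, and a gripper value, with each continuous value stored as one of 256 bins. That layout, the physical ranges, the example string, and every name in the code are assumptions for illustration rather than Google's actual implementation.

```python
from dataclasses import dataclass
from typing import Tuple

NUM_BINS = 256  # assumed: each action dimension is an integer bin in 0..255

# Hypothetical physical ranges, chosen only for illustration.
POS_RANGE = (-0.1, 0.1)     # metres of end-effector travel per step
ROT_RANGE = (-0.5, 0.5)     # radians of end-effector rotation per step
GRIPPER_RANGE = (0.0, 1.0)  # 0.0 = one extreme, 1.0 = the other

@dataclass
class RobotAction:
    terminate: bool
    delta_pos: Tuple[float, float, float]
    delta_rot: Tuple[float, float, float]
    gripper: float

def bin_to_value(bin_index: int, low: float, high: float) -> float:
    """Map an integer bin uniformly onto the closed interval [low, high]."""
    if not 0 <= bin_index < NUM_BINS:
        raise ValueError(f"bin {bin_index} outside 0..{NUM_BINS - 1}")
    return low + (high - low) * bin_index / (NUM_BINS - 1)

def decode_action(text: str) -> RobotAction:
    """Turn a model output string into a structured robot action."""
    fields = [int(token) for token in text.split()]
    if len(fields) != 8:
        raise ValueError(f"expected 8 fields, got {len(fields)}")
    terminate, *bins = fields
    delta_pos = tuple(bin_to_value(b, *POS_RANGE) for b in bins[0:3])
    delta_rot = tuple(bin_to_value(b, *ROT_RANGE) for b in bins[3:6])
    gripper = bin_to_value(bins[6], *GRIPPER_RANGE)
    return RobotAction(bool(terminate), delta_pos, delta_rot, gripper)

# Made-up example string, not taken from real model output.
action = decode_action("0 128 91 241 5 101 127 255")
print(action)
```

Because the action is just text, the same language model that answers questions about an image can emit it, and a thin decoding layer like this one hands the numbers to the robot controller.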

What Problem Does RT2's VLM Solve?

Google researchers identified a gap in how current VLMs were being applied in the robotic space. They note that current methods and approaches tend to focus on high-level robotic planning, such as strategic state machine models. This leaves a void in the lower-level execution of robotic action, where the majority of control engineers do their work. Thus, Google is attempting to bring the power and benefits of VLMs down into the control engineers' domain of programming robotics.

Figure 3. Applications of the RT2 model. Image used courtesy of Google

An Exciting Application of Machine Learning

The RT2 model is one of the more exciting applications of machine learning in the industrial automation space. Because the RT2 software and its underpinning machine learning algorithm are somewhat new, their true potential has yet to be seen. Whatever that potential turns out to be technologically, plenty are speculating it will make a great impact on robotics and control automation.

If you are a small business owner manufacturing goods with automated equipment, hiring automation and control engineers can be expensive. It’s unlikely the RT2 model or future derivatives will replace engineers; it’s more likely they will increase engineers’ productivity. From a business owner's perspective, these technologies could potentially empower employees to learn, grow, and deliver projects faster. And lest we forget, time is money.

From a control or automation engineer's perspective, the RT2 model could potentially help accelerate repetitive programming tasks while serving as a resource for new project designs. Consider how much time is spent responding to repetitive pick-and-place failures. It's a common scenario: a well-intentioned but undertrained technician “re-teaches” the automation to address what they “believe” is the root cause of a real-time production issue. This often cascades into other system errors and may or may not solve the underlying issue. The RT2 model could potentially help upskill operators or reduce the amount of troubleshooting time required of control automation engineers.

Expensive But Probably Worth It

As with most emerging technologies, there are limitations, despite great engineering and good intentions. First, the RT2 model cannot produce robotic motions it was not trained on. What it can do is apply the motion coordinates in the robotic training dataset in new ways to solve the visual and text-based challenge at hand, but those motions do have to exist in the robotic training dataset. Second, the computational cost of the hardware and software is high. To see mass adoption, optimizing the model to run on lower-cost hardware is essential.

More than Meets the Eye

The RT2 model is a promising and exciting application of machine learning in the robotics space, and there’s more to it than meets the eye. It’s great to see research being performed in this arena, and I’m excited to see what the future holds.