Using AWS Greengrass and SageMaker Tools to Detect Manufacturing Defects

This article discusses two Amazon Web Services (AWS) offerings, Amazon SageMaker and AWS IoT Greengrass, and how their edge capabilities can benefit machine learning use cases such as defect detection.

Traditional IoT devices typically transmit small payloads to their respective gateways/base stations at relatively infrequent intervals. These sensor-node systems rely on their cloud connectivity to perform all the relevant processing and analytics in order to monitor and control equipment.

However, a growing number of use cases require near-real-time feedback and communication. Edge computing removes the round trip to the cloud, which reduces end-to-end latency and eases bandwidth constraints, lowers data transmission and storage costs, and can help with data management compliance. Running machine learning (ML) algorithms and computational models locally brings intelligence to these IoT devices and opens the door to a number of applications.

Defect detection is one such use case, where smart capabilities can greatly improve the operational efficiency, productivity, and yield of a manufacturing facility. Automated visual inspection, often called machine vision (MV), relies on a combination of systems, including sensors and cameras. It can use trained ML models for image analysis to perform feature-based inspections for fault detection and classification.

The sections that follow look at how these capabilities apply to defect detection and conclude with a discussion of how specific features of SageMaker and Greengrass can be leveraged to implement an ML algorithm of choice for defect detection.

Figure 1. Automated inspection vision system. Image courtesy of Adobe Stock

The Defect Detection Problem

Defect detection is usually accomplished by optical inspection, in which an intelligent camera equipped with the proper sensors and an embedded processor detects flaws such as scratches, cracks, surface roughness, and bubbles (often as a binary pass/fail inspection). Manufacturing lines can move a high volume of products through a camera’s field of view (FoV), with each item in view for anywhere from milliseconds to seconds. The camera’s resolution, exposure time, and lens, along with the light sources, all contribute to the quality of the data fed into the processor and ML algorithm.
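To make the timing constraint concrete, the arithmetic below converts a line speed into a per-part time budget and checks whether image capture, inference, and reject actuation fit inside it. This is a minimal sketch: the function names and stage timings are hypothetical, and it assumes the stages run one after another for each part rather than overlapping.

```python
def per_part_budget_ms(parts_per_minute: float) -> float:
    """Time available per part, in milliseconds, if parts arrive evenly spaced."""
    if parts_per_minute <= 0:
        raise ValueError("parts_per_minute must be positive")
    return 60_000.0 / parts_per_minute


def fits_budget(capture_ms: float, inference_ms: float, actuation_ms: float,
                parts_per_minute: float) -> bool:
    """True if capture, inference, and reject actuation finish before the next part.

    Assumes the three stages run sequentially for each part (no pipelining).
    """
    total_ms = capture_ms + inference_ms + actuation_ms
    return total_ms <= per_part_budget_ms(parts_per_minute)


if __name__ == "__main__":
    # Illustrative numbers only: 600 parts/minute leaves 100 ms per part.
    print(per_part_budget_ms(600))       # 100.0
    print(fits_budget(20, 45, 15, 600))  # True  (80 ms of work)
    print(fits_budget(20, 95, 15, 600))  # False (130 ms of work)
```

If capture and inference can overlap across consecutive parts, the per-stage limits relax, which is one reason measured latency on the actual hardware matters.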

How ML Algorithms Meet the Evolving Needs of Defect Detection

Machine learning algorithms have radically transformed the field of defect detection; in many applications these models outperform traditional computer vision algorithms in both accuracy and processing time. The main types of ML-based vision tasks are classification, object detection, and segmentation.

Beyond classifying an image, these tasks can involve localization or semantic segmentation. Localization determines where the classified object is located in the image, typically as a bounding box. Semantic segmentation assigns a class label to each pixel (or group of pixels) in an image; in the defect detection use case, this identifies exactly which pixels, or which part of the image, correspond to the defect. In other words, inference can be as coarse- or fine-grained as the application requires.
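The relationship between these levels of granularity can be illustrated with a small example. The sketch below assumes a binary mask in which 1 marks a defect pixel: the mask itself is the semantic segmentation output, and a coarser pass/fail label and a bounding box (localization) are derived from it. In practice each level is usually produced by its own model; the helper names here are hypothetical.

```python
from typing import List, Optional, Tuple

Mask = List[List[int]]  # 1 = defect pixel, 0 = background


def classify(mask: Mask) -> str:
    """Coarsest output: one pass/fail label for the whole image."""
    return "fail" if any(any(row) for row in mask) else "pass"


def localize(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Bounding box (row_min, col_min, row_max, col_max) around all defect pixels."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)


def defect_area_fraction(mask: Mask) -> float:
    """Per-pixel detail summarized as the fraction of pixels labeled defect."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0


if __name__ == "__main__":
    scratch = [
        [0, 0, 0, 0, 0],
        [0, 1, 1, 0, 0],
        [0, 0, 1, 0, 0],
        [0, 0, 0, 0, 0],
    ]
    print(classify(scratch))              # fail
    print(localize(scratch))              # (1, 1, 2, 2)
    print(defect_area_fraction(scratch))  # 0.15
```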

Deep learning neural networks for classification, detection, and real-time detection have been in development since the early 2010s (e.g., AlexNet, SqueezeNet, R-CNN, and DefectSegNet). However, in a factory these models need to run at real-time inference speeds so that products can be properly monitored and tracked. Moreover, factories can have low network bandwidth and intermittent connectivity. This is where an intelligent edge-based defect detection system is critical. The hybrid cloud-and-edge approach of AWS IoT Greengrass and Amazon SageMaker addresses the reliability needs of industrial defect detection.

How Amazon SageMaker and AWS Greengrass Can Be Used in Tandem for Edge Devices

What is Amazon SageMaker?

Amazon SageMaker is a cloud-based platform for ML practitioners to build, train, optimize, and deploy ML models. It brings together a broad set of purpose-built capabilities that let engineers build their model and then optimize it to take advantage of the hardware acceleration available on supported IoT devices.

A little about ML training

Training is a compute-intensive process in which the ML algorithm is fed data to learn the patterns and relationships that ultimately allow the model to make decisions and estimate its confidence in them; this can require substantial computing resources, which cloud infrastructure can readily provide. ML inference uses the trained model to make predictions. Inference requires far less computing power than training, but at the edge it must run with low latency on limited hardware, two major constraints in edge use cases. Models must therefore be optimized for the specific edge device hardware, since storage- and power-constrained IoT devices can often run only specific, lightweight ML libraries.
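The article does not detail which optimizations are applied for a given device. One widely used technique for fitting models onto storage- and power-constrained hardware is storing weights at reduced precision; the sketch below shows symmetric 8-bit quantization of a list of weights. It illustrates the general idea only and is not a description of what SageMaker Neo does internally.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest step; clamp only to guard against float round-off.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 0.031, 1.0]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(weights, restored))
    print(q, scale, worst)  # worst-case error is at most scale / 2
    # Storage: 4 bytes per float32 weight vs 1 byte per int8 code (plus one scale).
    print(f"float32: {4 * len(weights)} bytes, int8: {len(q)} bytes")
```

The trade-off is a small, bounded rounding error per weight in exchange for a roughly fourfold reduction in weight storage, and int8 arithmetic is what many edge NPUs are designed to accelerate.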

What is AWS IoT Greengrass?

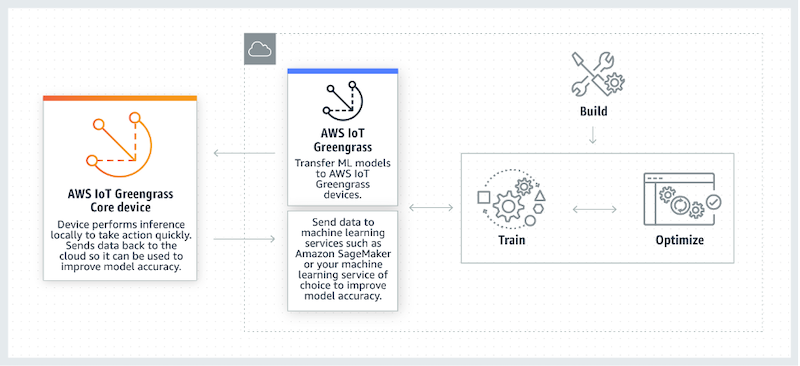

AWS IoT Greengrass is an open-source edge runtime and cloud service for building, deploying, and managing device software. Device software managed by AWS IoT Greengrass, such as the Amazon SageMaker Edge Manager agent, can capture data from inferences run on the device and efficiently return this feedback to the cloud (Figure 2). The data can then be sent to SageMaker to be labeled and used to continuously improve the quality of the ML model.

Figure 2. Using AWS IoT Greengrass for inference at the edge. Image courtesy of AWS
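On the device side, not every inference needs to go back to the cloud. A common pattern, sketched below using a simple hypothetical record format, is to keep only low-confidence predictions, since those are the most useful for relabeling. This sketch does not use the SageMaker Edge Manager capture interface; the threshold, record fields, and payload layout are illustrative placeholders, and the upload transport is left out.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class InferenceResult:
    image_id: str
    label: str         # "pass" or "fail"
    confidence: float  # model's probability for `label`, 0.0 to 1.0


def select_for_labeling(results: List[InferenceResult], threshold: float = 0.8,
                        max_items: int = 100) -> List[InferenceResult]:
    """Keep predictions below the confidence threshold, least confident first."""
    uncertain = [r for r in results if r.confidence < threshold]
    uncertain.sort(key=lambda r: r.confidence)
    return uncertain[:max_items]


def to_upload_payload(device_id: str, samples: List[InferenceResult]) -> str:
    """Serialize the selected samples as JSON (how it is sent is not shown)."""
    return json.dumps({"device_id": device_id, "samples": [asdict(s) for s in samples]})


if __name__ == "__main__":
    batch = [
        InferenceResult("img-001", "pass", 0.99),
        InferenceResult("img-002", "fail", 0.55),
        InferenceResult("img-003", "pass", 0.70),
    ]
    picked = select_for_labeling(batch)
    print(to_upload_payload("camera-07", picked))
```

Filtering on the device keeps bandwidth use low on constrained factory networks while still sending the examples most likely to improve the next model version.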

Using AWS Greengrass and Amazon SageMaker for defect detection

Creating a dataset with a camera setup

Users can prepare their dataset by using cameras to capture images of the relevant parts of products as they pass along the conveyor belt. For this to work, the camera installations must capture images of both defective and non-defective products under good, consistent lighting. The images are then annotated with image labeling tools to record both the image category and the location of the defect (for semantic segmentation, a per-pixel mask).
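Once labeled, the images need to be listed in a format the training algorithm accepts. The SageMaker built-in image classification algorithm can read a .lst file: a tab-separated list with an image index, a class label index, and a relative image path on each line. The sketch below builds such rows and checks that both classes are present; the class names, label indices, and directory layout are hypothetical.

```python
from typing import Dict, List

# Hypothetical class names and label indices for a pass/fail dataset.
CLASS_INDEX = {"good": 0, "defect": 1}


def make_lst_lines(images_by_class: Dict[str, List[str]]) -> List[str]:
    """Build tab-separated .lst rows: image index, class label index, relative path."""
    lines = []
    index = 0
    for class_name in sorted(images_by_class):
        for rel_path in sorted(images_by_class[class_name]):
            lines.append(f"{index}\t{CLASS_INDEX[class_name]}\t{rel_path}")
            index += 1
    return lines


def check_both_classes(images_by_class: Dict[str, List[str]]) -> None:
    """The dataset needs examples of both defective and non-defective parts."""
    missing = [c for c in CLASS_INDEX if not images_by_class.get(c)]
    if missing:
        raise ValueError(f"no images for class(es): {missing}")


if __name__ == "__main__":
    images = {"good": ["good/0001.jpg", "good/0002.jpg"], "defect": ["defect/0001.jpg"]}
    check_both_classes(images)
    print("\n".join(make_lst_lines(images)))
```

Sorting makes the output deterministic, so regenerating the list for the same images produces the same file.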

Using models in SageMaker

SageMaker has a number of built-in algorithms. In this example, the built-in image classification algorithm is used; it is based on the ResNet convolutional neural network (CNN) and is trained using a set of labeled images and hyperparameters (tunable values that control the learning process itself). For semantic segmentation, the defect masks are produced by a U-Net CNN implemented with the TensorFlow framework and trained through the SageMaker SDK. Segmentation masks are pixel-level annotations that label each pixel in an image with a particular “class”.
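As an illustration of the hyperparameters mentioned above, the helper below assembles a set for the built-in image classification algorithm. The parameter names follow that algorithm's documentation as we understand it (verify against the current SageMaker reference); the values are illustrative starting points rather than tuned settings, and the function itself is hypothetical.

```python
from typing import Dict


def image_classification_hyperparameters(num_training_samples: int,
                                         num_classes: int = 2,
                                         epochs: int = 30,
                                         learning_rate: float = 0.001,
                                         mini_batch_size: int = 32,
                                         num_layers: int = 18,
                                         image_size: int = 224) -> Dict[str, str]:
    """Hyperparameters for the SageMaker built-in image classification algorithm.

    Values are illustrative, not tuned. Training jobs receive hyperparameters
    as strings, so every value is converted before returning.
    """
    if num_training_samples <= 0:
        raise ValueError("num_training_samples must be positive")
    params = {
        "num_layers": num_layers,            # ResNet depth
        "num_classes": num_classes,          # e.g. good / defect
        "num_training_samples": num_training_samples,
        "image_shape": f"3,{image_size},{image_size}",  # channels,height,width
        "epochs": epochs,
        "learning_rate": learning_rate,
        "mini_batch_size": mini_batch_size,
        "use_pretrained_model": 1,           # start from pretrained weights
    }
    return {name: str(value) for name, value in params.items()}


if __name__ == "__main__":
    print(image_classification_hyperparameters(num_training_samples=1200))
```

Starting from pretrained weights (transfer learning) is a reasonable default when only a modest number of defect images is available.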

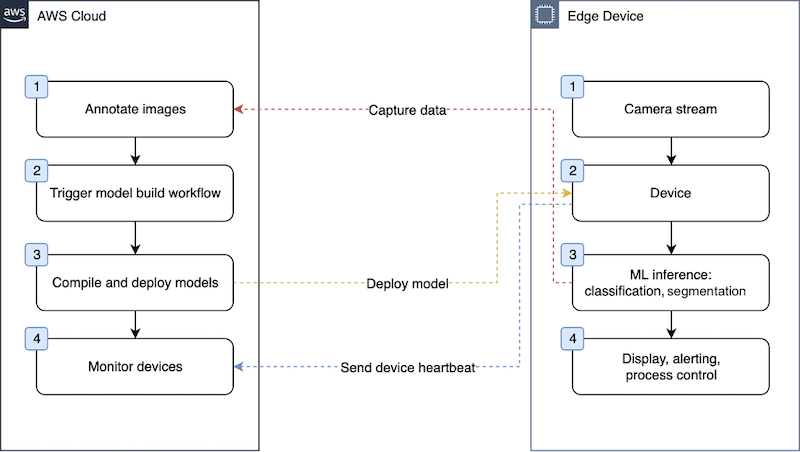

The defect detection cloud-to-edge lifecycle

As shown in Figure 3 below, an automated model-building workflow is triggered that runs a sequence of steps, including data augmentation, model training, and postprocessing. These workflows are automated with continuous integration and continuous delivery (CI/CD) to trigger model retraining in the cloud as well as over-the-air (OTA) updates to the edge. This way, both the cloud-based models and the edge-based inference stay up to date and accurate.

Figure 3. The process flow for defect detection using the AWS Cloud platform. Image courtesy of AWS
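The article does not specify which augmentations the workflow applies. Flips and 90-degree rotations are a common choice for surface inspection images, and the sketch below shows the one detail that matters for segmentation: each transform must be applied to the image and its mask together. Images are plain nested lists here to keep the example dependency-free; the function names are hypothetical.

```python
from typing import List, Tuple

Grid = List[List[int]]


def hflip(grid: Grid) -> Grid:
    """Mirror left to right."""
    return [list(reversed(row)) for row in grid]


def rot90(grid: Grid) -> Grid:
    """Rotate 90 degrees clockwise (works for non-square grids)."""
    return [list(row) for row in zip(*grid[::-1])]


def augment_pair(image: Grid, mask: Grid) -> List[Tuple[Grid, Grid]]:
    """Return the original plus a mirrored copy and three rotations.

    Each transform is applied to the image and its mask together, so defect
    pixels in the mask stay aligned with the defect in the image.
    """
    pairs = [(image, mask), (hflip(image), hflip(mask))]
    img, msk = image, mask
    for _ in range(3):
        img, msk = rot90(img), rot90(msk)
        pairs.append((img, msk))
    return pairs


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6]]
    mask = [[0, 1, 0], [0, 0, 1]]
    for img, msk in augment_pair(image, mask):
        print(img, msk)
```

Transforming only the image would silently misalign the labels, which hurts segmentation training far more than having no augmentation at all.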

Packaging the model for your edge devices

To ensure the edge device is configured with the built and trained ML model, the model is compiled with SageMaker Neo and packaged with SageMaker Edge Manager, and an AWS IoT Greengrass over-the-air deployment is created to instruct the edge application to download the model package. These steps must be repeated for every new version of the trained model, a process that can be easily automated.

In other words, SageMaker Neo and SageMaker Edge Manager can be used to manage the lifecycle of the models and to configure the edge device for the built and trained ML model. This way, developers can optimize an ML model specifically for their target platform, for example an embedded MPU such as the NXP i.MX 8M Plus applications processor. A model built with any of the ML frameworks that SageMaker supports serves as the input.
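Because these steps repeat for every model version, they lend themselves to scripting. The sketch below builds request bodies for the SageMaker CreateCompilationJob (Neo) and CreateEdgePackagingJob (Edge Manager) APIs from a version string. The bucket, role ARN, model name, S3 layout, and MXNet input shape are assumptions, and a generic 64-bit Arm Linux target stands in for a specific device; check field names and naming rules against the current API reference.

```python
import json
from typing import Dict


def build_release_requests(version: str, role_arn: str, bucket: str,
                           model_name: str = "defect-classifier") -> Dict[str, dict]:
    """Request bodies for a Neo compilation job and an Edge Manager packaging job."""
    # Job names allow letters, digits, and hyphens, so "1.2.0" becomes "1-2-0".
    safe_version = version.replace(".", "-")
    compilation_job = f"{model_name}-neo-{safe_version}"
    compilation = {
        "CompilationJobName": compilation_job,
        "RoleArn": role_arn,
        "InputConfig": {
            "S3Uri": f"s3://{bucket}/models/{version}/model.tar.gz",
            # Assumption: an MXNet model with one 3x224x224 input named "data".
            "DataInputConfig": json.dumps({"data": [1, 3, 224, 224]}),
            "Framework": "MXNET",
        },
        "OutputConfig": {
            "S3OutputLocation": f"s3://{bucket}/compiled/{version}/",
            # Generic ARM64 Linux target; a device-specific target may suit better.
            "TargetPlatform": {"Os": "LINUX", "Arch": "ARM64"},
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 900},
    }
    packaging = {
        "EdgePackagingJobName": f"{model_name}-pkg-{safe_version}",
        "CompilationJobName": compilation_job,  # must name the finished Neo job
        "ModelName": model_name,
        "ModelVersion": version,
        "RoleArn": role_arn,
        "OutputConfig": {
            "S3OutputLocation": f"s3://{bucket}/packaged/{version}/",
            # Also emit the packaged model as a Greengrass v2 component.
            "PresetDeploymentType": "GreengrassV2Component",
        },
    }
    return {"compilation": compilation, "packaging": packaging}


if __name__ == "__main__":
    reqs = build_release_requests("1.2.0", "arn:aws:iam::123456789012:role/Example",
                                  "example-bucket")
    print(json.dumps(reqs, indent=2))
```

In a release script, these dictionaries would be passed to `boto3.client("sagemaker").create_compilation_job(**reqs["compilation"])` and, once `describe_compilation_job` reports the job as completed, to `create_edge_packaging_job(**reqs["packaging"])`. Keeping request construction separate from the API calls makes the naming logic easy to test.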

Using AWS Greengrass with SageMaker to continuously improve the quality of the ML model

SageMaker also integrates with AWS IoT Greengrass to simplify accessing, maintaining, and deploying the SageMaker Edge Manager agent and the model to the fleet of edge devices. Data gathered from inference running on AWS IoT Greengrass can be sent back to SageMaker to be labeled and used to continuously improve the quality of the ML models.
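Rolling a new model package out to the fleet then amounts to a Greengrass deployment. The sketch below assembles keyword arguments for the AWS IoT Greengrass V2 CreateDeployment API (`boto3.client("greengrassv2").create_deployment`). The thing group ARN, the inference application component name, and all component versions are hypothetical, and any configuration the `aws.greengrass.SageMakerEdgeManager` component requires is left to the caller rather than guessed here.

```python
import json
from typing import Dict, Optional


def build_deployment_request(thing_group_arn: str, release: str,
                             components: Dict[str, str],
                             configs: Optional[Dict[str, dict]] = None) -> dict:
    """Keyword arguments for the Greengrass v2 CreateDeployment API.

    `components` maps component name -> component version; `configs` optionally
    maps a component name to a configuration dict merged into its defaults.
    """
    configs = configs or {}
    spec = {}
    for name, version in components.items():
        entry = {"componentVersion": version}
        if name in configs:
            # The API expects the merge document as a JSON string.
            entry["configurationUpdate"] = {"merge": json.dumps(configs[name])}
        spec[name] = entry
    return {
        "targetArn": thing_group_arn,
        "deploymentName": f"defect-detection-{release}",
        "components": spec,
    }


if __name__ == "__main__":
    req = build_deployment_request(
        "arn:aws:iot:us-east-1:123456789012:thinggroup/LineOneCameras",  # hypothetical
        "1-2-0",
        {
            "aws.greengrass.SageMakerEdgeManager": "1.0.0",  # placeholder version
            "com.example.DefectInspector": "1.2.0",          # hypothetical app component
        },
        configs={"com.example.DefectInspector": {"confidenceThreshold": 0.8}},
    )
    print(json.dumps(req, indent=2))
```

As we understand Greengrass deployment semantics, a new deployment to the same target replaces the previous one, so each request should list the full set of components the devices are meant to run.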

Observing the optimal hardware for your application

Inference can be run on a range of hardware and chip architectures and benchmarked for latency on each. This way, businesses can make a more informed cost-benefit analysis when choosing the CPUs and GPUs in their fleet, using models and model architectures built with SageMaker. The i.MX 8M Plus offers further options because its dedicated ML acceleration core, a neural processing unit (NPU), is also supported for deploying ML models via Amazon SageMaker.
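A simple way to compare candidate hardware is to time the same model on each device. The harness below is a minimal, framework-agnostic sketch: `infer` is a placeholder for whatever call runs the deployed model, and the warm-up count, run count, and nearest-rank percentiles are arbitrary choices rather than a standard benchmark.

```python
import math
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], object], warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time repeated calls to `infer` and summarize latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):  # let caches and lazy initialization settle
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()

    def percentile(p: float) -> float:
        # Nearest-rank percentile on the sorted samples.
        rank = max(1, math.ceil(p / 100.0 * len(samples)))
        return samples[rank - 1]

    return {
        "mean_ms": statistics.mean(samples),
        "p50_ms": percentile(50),
        "p95_ms": percentile(95),
        "max_ms": samples[-1],
    }


if __name__ == "__main__":
    # Stand-in workload; on a device this would invoke the deployed model.
    print(benchmark(lambda: sum(i * i for i in range(20_000))))
```

Reporting tail latency (p95, max) alongside the mean matters on a production line, where an occasional slow inference can let a defective part pass uninspected.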

Cloud-Based Tools for Edge ML at Scale

Delivering energy-efficient edge ML remains a major challenge, even with the growing hardware ecosystem around applications processors such as the NXP i.MX 8M and i.MX 9 families, which integrate hardware neural processing units with dedicated multi-sensory data processing engines (graphics, image, display, audio, and voice) to run low-cost, energy-efficient edge ML algorithms. Models must still be optimized for specific edge device hardware. Tools such as AWS IoT Greengrass and SageMaker, with their fully managed infrastructure and workflows, allow data engineers to readily build, train, optimize, deploy, and closely monitor ML models across large numbers of devices.