How Object Detection Works in Machine Vision Applications

This article introduces how machine vision and object recognition work and how developers are making them easier to integrate for wider market adoption.

One of the main challenges with machine vision is that it is still a relatively new concept. While models are currently being developed, adopting machine vision technologies will often require some type of coding or programming knowledge. Many industries have specific applications that make object recognition and other features challenging to fit into a pre-programmed library of parts.

The First Steps of Machine Vision

Many articles bring up the comparison of apples and oranges in machine vision. Detecting an apple could be as easy as having the camera detect a circle, or perhaps an apple-shaped circle. But that alone might lead the system to confuse an orange with an apple. If you give the software more data, such as color, it can tell apples from oranges by both color and shape. More data, or more identifying variables, increases the system's confidence in accurately identifying an object.
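The apple-and-orange idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `roundness` and `hue_deg` features, the thresholds, and the function names are hypothetical, and the color rule covers red apples only.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical features measured from one camera image."""
    roundness: float  # 0.0 (irregular) to 1.0 (perfect circle)
    hue_deg: float    # dominant hue in degrees on the HSV color wheel


def classify_by_shape(obs: Observation) -> str:
    # Shape alone: anything round enough is called an apple.
    return "apple" if obs.roundness > 0.8 else "unknown"


def classify_by_shape_and_color(obs: Observation) -> str:
    # Shape plus color: round objects are separated by hue.
    if obs.roundness <= 0.8:
        return "unknown"
    if obs.hue_deg < 15 or obs.hue_deg > 345:  # reds sit near 0/360 degrees
        return "apple"
    if 15 <= obs.hue_deg <= 45:                # oranges sit near 30 degrees
        return "orange"
    return "unknown"


orange = Observation(roundness=0.95, hue_deg=30)
print(classify_by_shape(orange))            # shape alone mislabels the orange
print(classify_by_shape_and_color(orange))  # the extra feature separates them
```

With shape as the only variable, the orange is labeled an apple. Adding hue is the "more data" step that lets the two be told apart.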


These variables go by many names: features, identifiers, classifiers, and so on. In general, they are colors, geometries, angles, distances between boundary lines, and other measurements that help a computer identify an object from an image or video provided by a camera. Think of it as the opposite of creating a CAD drawing.

When developing a part in CAD, engineers create a filename. Then, using extrudes, cuts, and other CAD features, they build the part digitally. In machine vision, the software is given an image of a part or component and tries to work backward. A program might note where there is and isn't material, along with holes, diameters, and other features, and use classifiers to identify the part or filename.
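As a rough sketch of "working backward," the example below takes a tiny binary image (1 = material, 0 = no material), measures two features, and looks the part up by its hole count. The grid, the feature choice, and the `PART_LIBRARY` names are all hypothetical. A real system would work on camera images with far richer features.

```python
from collections import deque


def count_holes(grid):
    """Count empty regions fully enclosed by material (4-connected flood fill)."""
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    holes = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != 0 or seen[r][c]:
                continue
            # Flood-fill this empty region and note whether it reaches the edge.
            touches_border = False
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                if y in (0, rows - 1) or x in (0, cols - 1):
                    touches_border = True
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and grid[ny][nx] == 0 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if not touches_border:
                holes += 1
    return holes


def extract_features(grid):
    # "area" is the number of material pixels; "holes" is the enclosed-void count.
    return {"area": sum(map(sum, grid)), "holes": count_holes(grid)}


# Hypothetical part library keyed by hole count.
PART_LIBRARY = {0: "blank_disc", 1: "washer", 2: "two_hole_bracket"}

washer_image = [
    [0, 1, 1, 1, 0],
    [1, 1, 1, 1, 1],
    [1, 1, 0, 1, 1],
    [1, 1, 1, 1, 1],
    [0, 1, 1, 1, 0],
]

features = extract_features(washer_image)
print(features, PART_LIBRARY.get(features["holes"], "unknown"))
```

The empty corner pixels touch the image border, so they count as background rather than holes. Only the enclosed center pixel is treated as a hole.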

Learning with Machine Vision

These variables are set up in code. Models are often built by detecting features such as circles, squares, and colors, but they may need to "learn," or be trained, to identify objects, especially custom or non-standard parts.

This ability to learn is why some people associate object detection with AI. Manufacturing vision software today is largely supervised. In a supervised system, sometimes loosely described as weak AI, the software only outputs or identifies objects for which labeled examples have already been programmed into the system.

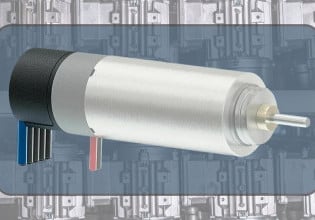

Vision system cameras that often use object detection. Image courtesy of Cognex.

Images can help a model recognize objects in two ways: first, as examples of what you want to identify, and second, as examples of what you do not want to identify. For example, if you want a vision system to identify an apple, you might add images of different types of apples. The more images of varied apples you supply, the more accurate or confident the system will become. However, you could also add images of things that are not apples, such as oranges, and label them as not being apples.
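The use of both positive and negative examples can be illustrated with a deliberately simple nearest-centroid classifier. This is a swapped-in toy technique, not the method any particular vision product uses. Each image is assumed to have already been reduced to two hypothetical features in the 0 to 1 range, `(roundness, redness)`.

```python
from statistics import mean


def centroid(samples):
    """Average each feature across a list of feature tuples."""
    return tuple(mean(column) for column in zip(*samples))


def train(positives, negatives):
    # One centroid per label: what apples look like and what non-apples look like.
    return {"apple": centroid(positives), "not_apple": centroid(negatives)}


def predict(model, features):
    # Pick the label whose centroid is closest (squared Euclidean distance).
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(model, key=lambda label: dist2(model[label], features))


# Hypothetical (roundness, redness) features from labeled images.
apple_images = [(0.90, 0.85), (0.85, 0.90), (0.95, 0.80)]
orange_images = [(0.95, 0.40), (0.90, 0.35)]  # negative examples

model = train(apple_images, orange_images)
print(predict(model, (0.88, 0.82)))  # close to the apple examples
print(predict(model, (0.93, 0.38)))  # close to the orange examples
```

Without the negative examples, the model would have nothing to compare against, and every round object would land nearest the only centroid it knows.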

Automatic-learning, or more robust, AI vision systems would be able to keep learning from the initial set of images and classifiers. Depending on the algorithm, such a system might classify a red ball as an apple, or it might become more specific about which types of apple count as an "apple." While manually adding new images or classifiers to a program can be time-consuming, it helps ensure the model learns appropriately.

If an image doesn't meet specific parameters or confidence levels, the software could default to rejecting the part or sending a notification to a human. This type of system lets a technician review a questionable object. Once the technician has properly identified the part, it should be possible to add the image to the database as a correct or incorrect identification. Adding new images in this way is a form of training the software.
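A minimal sketch of that review loop follows. The two thresholds, the three-way split between accepting, notifying a technician, and rejecting, and the `TrainingSet` class are assumptions made for illustration. The text above only says the software could reject the part or notify a human.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; real values depend on the application.
ACCEPT_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.60


def route(confidence: float) -> str:
    """Decide what to do with a prediction based on its confidence score."""
    if confidence >= ACCEPT_THRESHOLD:
        return "accept"
    if confidence >= REVIEW_THRESHOLD:
        return "notify_technician"
    return "reject"


@dataclass
class TrainingSet:
    """Stands in for the image database the model is trained from."""
    examples: list = field(default_factory=list)

    def add_reviewed(self, image_id: str, label: str, is_correct: bool) -> None:
        # Store the technician's verdict so it can be used in later training.
        self.examples.append((image_id, label, is_correct))


training_set = TrainingSet()
decision = route(0.72)
if decision == "notify_technician":
    # The technician confirms the questionable part really was an apple.
    training_set.add_reviewed("img_001", "apple", True)

print(decision, training_set.examples)
```

Each reviewed image becomes a new labeled example, which is how the technician's decisions feed back into the model.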

The idea is that as more images or classifiers are added, the system will become better at identifying objects. This should lead to fewer rejects, errors, or notifications caused by the software failing to identify a part.

What to Look For in Machine Vision Systems

Many machine vision classes can be found online, and some links will be provided at the end of this article. However, if you are not familiar with coding and are looking for a solution today, make sure you find a vendor with experience, user-friendly software, and excellent customer service. While some machine vision companies may offer pre-programmed software or platforms, having someone work directly with you on your application is essential.

For example, during the setup of a machine vision system for a geometric dimensioning and tolerancing (GD&T) application, nothing seemed to work. After many failed attempts, even with responsive technical support over the phone, the vendor came into the shop.

In this real-life example, a $700 camera would have delayed the delivery of a few thousand dollar machines. Look for excellent customer service, and check whether the vendor offers service packages for maintenance, commissioning, or other aftermarket services.

Make sure a vendor walks you through selecting a base workflow model or setting up your own for a specific application. You will want to be able to add or delete the images, videos, or classifiers that you want your model to learn or unlearn. The ability to alter a model is important if production or accuracy changes over time.

It is important to know what objects you need to classify, why, and how. In a production line that only makes screws, machine vision might matter most for verifying GD&T requirements. But in applications that handle many objects, such as packaging lines or bin picking, object recognition is important. There are myriad reasons a scanner or camera might fail to read a label to identify an object.

If machine vision software can recognize objects accurately, it could aid in the trend of more flexible and dynamic production lines able to handle multiple products. Machine vision technology is growing. As more images, classifiers, and development are added to models, we can expect to "see" more for our production lines.