What is the Difference Between Accuracy, Precision, and Resolution?

The differences between accuracy, precision, and resolution are important but challenging to pin down. This article introduces the characteristics of each concept and how they relate to one another.

Search the web and the difference between accuracy, precision, and resolution turns out to be a common question, yet clear answers are hard to find. Colloquially, accuracy and precision are used almost interchangeably. While all three concepts are related, they are very different from one another.

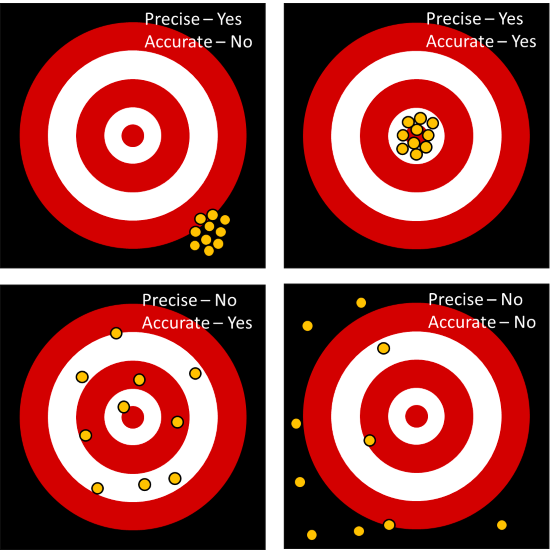

Most explanations rely on one of two diagrams: a dartboard, or a comparison of ideal vs. non-ideal analog-to-digital conversion steps. Both are a good start, but neither really helps anyone decide which option is better or, more importantly, what is good enough. A grasp of these concepts lets a system designer, or someone troubleshooting a system, understand how to avoid problems and how to correct the ones they encounter.

At first glance, this seems like an easy concept. While preparing to write this, however, I asked a few people what the differences between these concepts were. They started out on the right track, but when it came to pinning down the differences, they found it hard to do.

Accuracy vs. Precision Using Targets

Let us start with the diagrams everyone is shown, to establish a baseline for the differences between accuracy and precision and for how they relate to resolution.

Here is a typical target example used to introduce this concept.

Figure 1. Basic diagrams for an initial baseline using targets.

Visually, it is largely self-explanatory, though there is more here than meets the eye. The first question to ask when seeing a chart like this is: what are we trying to measure?
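One way to make "what are we trying to measure?" concrete is to treat each dart as an (x, y) point and compute two separate numbers. The sketch below assumes that accuracy is the distance from the centroid of the hits to the bullseye, and that precision is the RMS scatter of the hits around their own centroid. These are common conventions, not definitions taken from this article, and the function name and data sets are hypothetical.

```python
import math
from statistics import mean

def accuracy_and_precision(hits, target=(0.0, 0.0)):
    """Return (bias, spread) for a list of (x, y) hits.

    bias   -- distance from the centroid of the hits to the target
              (an accuracy measure; smaller is more accurate)
    spread -- RMS distance of the hits from their own centroid
              (a precision measure; smaller is more precise)
    """
    cx = mean(x for x, _ in hits)
    cy = mean(y for _, y in hits)
    bias = math.hypot(cx - target[0], cy - target[1])
    spread = math.sqrt(mean((x - cx) ** 2 + (y - cy) ** 2 for x, y in hits))
    return bias, spread

# Hypothetical throws: a tight cluster to the upper right (precise, not accurate)
tight_off_center = [(3.0, 3.0), (3.2, 2.9), (2.9, 3.1), (3.1, 3.0)]
# and a loose cluster around the bullseye (accurate, not precise).
loose_centered = [(2.0, 0.0), (-2.0, 0.0), (0.0, 2.0), (0.0, -2.0)]

print(accuracy_and_precision(tight_off_center))
print(accuracy_and_precision(loose_centered))
```

The first cluster reports a large bias with a small spread, while the second reports zero bias with a spread of 2.0. Keeping the two numbers separate is the point: one cluster can do well on one measure and badly on the other.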

Changing the Resolution

In darts, you add up points according to where each dart lands on the target, but with only five rings, the measurement is far from continuous. What does this have to do with accuracy or precision? Nothing, really. The number of rings is the resolution of the measurement: if we increase the number of rings, we gain resolution.
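The ring count behaves like a quantizer applied to a dart's distance from the center. The sketch below is a minimal illustration that assumes equal-width rings and a scoring convention chosen for this example (the bullseye scores highest, a miss scores 0); `ring_score` and its parameters are hypothetical.

```python
import math

def ring_score(x, y, n_rings, outer_radius=1.0):
    """Quantize a hit at (x, y) onto a target with n_rings equal-width rings.

    Returns n_rings for the bullseye, counting down to 1 for the outermost
    ring, and 0 for a hit at or beyond outer_radius.
    """
    r = math.hypot(x, y)
    if r >= outer_radius:
        return 0
    ring_width = outer_radius / n_rings
    # Ring index from the center (0 = bullseye); the clamp guards against
    # floating-point rounding pushing a hit just inside the edge out of range.
    index = min(int(r // ring_width), n_rings - 1)
    return n_rings - index

# Two hypothetical darts at different distances from the center.
near, far = (0.41, 0.0), (0.59, 0.0)
print(ring_score(*near, 5), ring_score(*far, 5))  # → 3 3
print(ring_score(*near, 7), ring_score(*far, 7))  # → 5 3
```

Both darts land in the same ring on the five-ring target but in different rings on the seven-ring target. The darts did not move; only the measurement got finer.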

Figure 2. Increasing the number of rings increases the resolution.

With the new target, we increased the resolution to seven rings, but the overall accuracy of the throws did not change. We are simply able to measure it better.

In fairness, while the real accuracy has not changed, the measured accuracy may. When we look at something, we evaluate it quickly in an analog mode, but resolution is inherently a digital property (range is outside the scope of this discussion). If we reduce the number of rings to the extreme, as shown below, we have very little ability to measure accuracy or precision.
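The gap between real and measured accuracy can be sketched by reporting each hit at the midpoint of the ring it falls in, then comparing the average reported distance with the true average. This assumes equal-width rings and a midpoint reporting rule, both chosen only for illustration; `measured_radius` and the sample distances are hypothetical.

```python
from statistics import mean

def measured_radius(r, n_rings, outer_radius=1.0):
    """Report a hit at true distance r as the midpoint of the ring it lands in.

    Hits at or beyond outer_radius are reported as outer_radius (a miss).
    """
    if r >= outer_radius:
        return outer_radius
    width = outer_radius / n_rings
    index = min(int(r // width), n_rings - 1)  # clamp for floating-point edge cases
    return (index + 0.5) * width

# Hypothetical true distances of four darts from the bullseye.
true_radii = [0.12, 0.18, 0.23, 0.31]
true_mean = mean(true_radii)  # the real accuracy; it never changes below

for n_rings in (2, 5, 7, 40):
    reported = [measured_radius(r, n_rings) for r in true_radii]
    print(n_rings, round(mean(reported), 4), round(true_mean, 4))
```

Each reported distance is off by at most half a ring width, so the measured average approaches the true one as rings are added. At two rings, all four darts are reported at 0.25, and the scatter between them disappears from the measurement entirely.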

Figure 3. Reducing the number of rings greatly reduces the ability to measure accuracy or precision.

In the two cases above, we kept the same data set but reduced the resolution until it is just a binary value. Very little data (there is a digital pun here) can be derived with regard to accuracy and precision from the low-resolution target.
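At the binary extreme, the target records only whether each dart landed inside a single circle. The sketch below assumes a unit-radius circle and two hypothetical data sets, and shows that a tight off-center cluster and a loose centered one can produce identical readings, so neither accuracy nor precision can be recovered from them. `hit_or_miss` is a hypothetical name.

```python
import math

def hit_or_miss(x, y, radius=1.0):
    """One-ring (binary) target: 1 if the dart lands inside the circle, else 0."""
    return 1 if math.hypot(x, y) < radius else 0

# Hypothetical throws: precise but off center,
tight_off_center = [(0.60, 0.60), (0.62, 0.58), (0.58, 0.61), (0.61, 0.60)]
# and accurate but scattered.
loose_centered = [(0.5, 0.0), (-0.5, 0.0), (0.0, 0.5), (0.0, -0.5)]

print([hit_or_miss(x, y) for x, y in tight_off_center])  # → [1, 1, 1, 1]
print([hit_or_miss(x, y) for x, y in loose_centered])    # → [1, 1, 1, 1]
```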

Next Steps: Variation, Error, and Analysis

Now that we have laid the visual groundwork, the next part of this series will look at the math involved, as well as a few sources of sensor variation and error that we need to take into account when analyzing our data. Looking at these helps us decide how to deal with the issues that arise around accuracy and resolution, and how to begin working through them.