Combining Two Deep Learning Models

Ensemble learning, the practice of combining multiple deep learning models, can be done with several techniques. Let’s review the current techniques along with their advantages and disadvantages.

Deep learning is an invaluable tool for data analysts, with new applications in many fields, including industry. Its basic working principle is to use large volumes of data to build a model that can make accurate predictions.

Let us consider a small example in which industrial automation engineers might need to combine two deep learning models. A smartphone company operates a production line that manufactures multiple smartphone models. Computer vision based on deep learning algorithms performs quality control on the production line.

Currently, the production line builds two smartphones: Phone A and Phone B. Models A and B perform the quality control for Phones A and B, respectively. The company then introduces a new smartphone, Phone C. The production facility may need a new model, Model C, to perform quality control for the third phone. Building a new model from scratch requires an enormous amount of data and time.

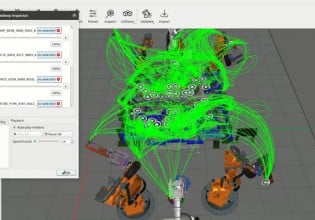

Figure 1. Video used courtesy of Matt Chan

An alternative is to combine what Models A and B have learned to build Model C. The combined model can then perform quality control for Phone C with minor adjustments to the weights.

Another scenario in which models need to be combined is when a new model has to perform two tasks simultaneously, each of which an existing deep learning model can already perform. For example, a model that needs to classify a data set and make predictions within each category can be created by combining two models: one that classifies large datasets and one that makes predictions.

Ensemble Learning

Combining multiple deep learning models is called ensemble learning. It is done to improve the predictions, classification, or other functions of a deep learning model. Ensemble learning can also create a new model with the combined functionalities of different deep learning models.

Creating a combined model has several benefits compared to training a new model completely from scratch.

- It requires comparatively little data to train the combined model because most of the learning is carried over from the models being combined.

- It takes less time to build a combined model than to build a new model.

- It requires fewer computing resources than training a new model from scratch.

- The combined model can achieve higher accuracy and broader capabilities than the individual models it was built from.

Because of these advantages, ensemble learning is often used to create a new model. Combining models requires the respective deep learning algorithms, packages, and trained models, and most advanced deep learning algorithms are written in Python.

Figure 2. A stacking ensemble for deep learning neural networks in Python. Image used courtesy of Machine Learning Mastery

Knowledge of Python and of the respective deep learning tools is a prerequisite for combining different models. Once those are in place, several techniques can be used to combine deep learning models. They are explained in the following sections.

(Weighted) Average Method

In this method, the average of the two models’ outputs is used as the prediction of the new model. It is the most straightforward way to combine two deep learning models. A model created by taking the simple average of two models is often more accurate than either of the two models on its own.

To further improve the accuracy of the combined model, a weighted average is a viable option. The weights given to the different models could be based on the performance of each model or on the amount of training each model underwent. As with the simple average, two different models are combined to form a new model.
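As a minimal sketch of the averaging idea, the snippet below combines the class probabilities of two models with NumPy. The arrays `probs_a` and `probs_b` are made-up stand-ins for the softmax outputs of Model A and Model B, and the helper name `weighted_average` is hypothetical; in practice the probabilities would come from the trained networks.

```python
import numpy as np

def weighted_average(predictions, weights):
    """Combine per-model class probabilities with a weighted average."""
    preds = np.asarray(predictions, dtype=float)  # (n_models, n_samples, n_classes)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                               # normalize so the weights sum to 1
    return np.tensordot(w, preds, axes=1)         # (n_samples, n_classes)

# Hypothetical softmax outputs for two samples and two classes
probs_a = np.array([[0.9, 0.1], [0.4, 0.6]])
probs_b = np.array([[0.6, 0.4], [0.2, 0.8]])

simple = weighted_average([probs_a, probs_b], [1, 1])    # plain average
weighted = weighted_average([probs_a, probs_b], [3, 1])  # trust Model A three times as much
labels = weighted.argmax(axis=1)                         # predicted class per sample
```

Equal weights reproduce the simple average. The weights `[3, 1]` are arbitrary here; one option is to set them from each model's validation accuracy.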

Bagging Method

The same deep learning model can be trained multiple times. Each version is trained on a different dataset, typically a random sample of the original training data, and therefore learns slightly different things. Combining the different versions of the same deep learning model is the bagging method.

The combination step is the same as in the averaging method: the different versions of the same deep learning model are combined with a simple or weighted average. This helps create a new model that is less affected by the quirks a single model picks up from its particular training data, making the combined model more accurate and higher-performing.
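The bagging procedure can be sketched as follows. To keep the example small and runnable, a straight-line fit (`np.polyfit`) stands in for the deep learning model, and the toy data, function names, and number of models are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy regression data (hypothetical): y = 2x + 1 plus noise
x = np.linspace(0.0, 1.0, 50)
y = 2.0 * x + 1.0 + rng.normal(0.0, 0.1, size=x.shape)

def fit_bagged(x, y, n_models=10):
    """Fit the same model type on bootstrap resamples of the data."""
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, len(x), size=len(x))  # sample indices with replacement
        models.append(np.polyfit(x[idx], y[idx], deg=1))
    return models

def predict_bagged(models, x_new):
    """Average the predictions of all bootstrap models."""
    preds = np.stack([np.polyval(m, x_new) for m in models])
    return preds.mean(axis=0)

models = fit_bagged(x, y)
y_hat = predict_bagged(models, np.array([0.0, 0.5, 1.0]))
```

With neural networks the structure stays the same: each resample trains one copy of the network, and the copies' outputs are averaged at prediction time.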

Boosting Method

The boosting method is similar to using a feedback loop for models. The performance of a model is used to adjust the subsequent models, so that each new model concentrates on the cases the previous ones handled poorly. This creates a feedback loop that accumulates the factors that contribute to the model’s success while correcting its errors step by step.

Figure 3. Boosting method for ensemble learning. Image used courtesy of Ashish Patel

The boosting method can reduce the bias, and often the variance, of the combined model, because the errors of earlier models are corrected in subsequent iterations. Boosting can be done in two distinct ways: weight-based boosting, which gives more weight to the samples earlier models got wrong, and residual-based boosting, which fits each new model to the error that remains.
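A minimal sketch of residual-based boosting is shown below. A one-split "stump" stands in for each sub-model, and the toy data, the learning rate `lr`, and the function names are assumptions chosen for illustration rather than a reference implementation.

```python
import numpy as np

def fit_stump(x, residual):
    """Find the single-threshold split that best fits the residuals."""
    best = None
    for t in np.unique(x)[:-1]:  # skip the max so the right side is never empty
        left, right = residual[x <= t], residual[x > t]
        err = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or err < best[0]:
            best = (err, t, left.mean(), right.mean())
    return best[1:]              # (threshold, left value, right value)

def predict_stump(stump, x):
    t, left_val, right_val = stump
    return np.where(x <= t, left_val, right_val)

def boost(x, y, n_rounds=20, lr=0.5):
    """Each round fits a new stump to the error left by the previous rounds."""
    base = y.mean()
    pred = np.full_like(y, base)
    stumps = []
    for _ in range(n_rounds):
        stump = fit_stump(x, y - pred)              # fit what is still wrong
        pred = pred + lr * predict_stump(stump, x)  # add a damped correction
        stumps.append(stump)
    return base, stumps, pred

x = np.linspace(0.0, 1.0, 40)
y = np.sin(2 * np.pi * x)
base, stumps, pred = boost(x, y)
mse_before = np.mean((y - base) ** 2)  # error of the constant starting model
mse_after = np.mean((y - pred) ** 2)   # error after boosting
```

Comparing `mse_before` with `mse_after` shows the training error shrinking as corrections accumulate. Weight-based boosting follows the same loop but re-weights the samples instead of fitting residuals.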

Concatenation Method

This method is used when different data sources are to be merged into the same model. The technique takes in the different inputs and concatenates them into a single input for the model. The resulting dataset has more dimensions than any of the original datasets.

When concatenation is done multiple times in sequence, the dimensionality of the data can grow very large, which can lead to overfitting and loss of critical information and thus reduce the performance of the combined model.
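The growth in dimensions is easy to see in a small sketch. The two feature arrays below are placeholders for the outputs of two hypothetical sub-models (one vision-based, one sensor-based) on the same four samples, and the random `weights` matrix stands in for a shared layer that would normally be trained.

```python
import numpy as np

# Hypothetical feature vectors from two sources for the same 4 samples
image_features = np.ones((4, 8))    # e.g., output of a vision sub-model
sensor_features = np.zeros((4, 3))  # e.g., output of a sensor sub-model

# Concatenate along the feature axis: 8 + 3 = 11 features per sample
merged = np.concatenate([image_features, sensor_features], axis=1)

# A shared layer then operates on the merged representation
rng = np.random.default_rng(0)
weights = rng.normal(size=(merged.shape[1], 2))  # untrained placeholder weights
logits = merged @ weights
```

Each further concatenation adds its feature count to the width of `merged`, which is why repeated merging calls for more training data or some form of dimensionality reduction.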

Stacking Method

The stacking method for ensemble deep learning integrates the different methods: sub-models are developed using the performance of previous iterations to boost later ones, and their outputs are then combined. Adding a weighted average to this stacked model strengthens the positive contributions of the sub-models.

Similarly, bagging and concatenation techniques can be added to the models. Using several combination techniques together in this way can improve the performance of the combined model.
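As a point of reference, the most common form of stacking trains a small meta-model on the predictions of the sub-models, so the combination weights are learned rather than chosen by hand. The sketch below assumes two already-trained sub-models whose predictions are simulated as noisy copies of the target; the data, noise levels, and the linear meta-model are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
y = rng.normal(size=200)  # targets (hypothetical)

# Simulated predictions of two already-trained sub-models
pred_a = y + rng.normal(0.0, 0.3, size=y.shape)  # more accurate sub-model
pred_b = y + rng.normal(0.0, 1.0, size=y.shape)  # noisier sub-model

# Meta-model: linear regression on the sub-model outputs plus a bias term
features = np.column_stack([pred_a, pred_b, np.ones_like(y)])
coef, *_ = np.linalg.lstsq(features, y, rcond=None)
stacked = features @ coef

def mse(p):
    """Mean squared error against the targets."""
    return float(np.mean((p - y) ** 2))
```

Calling `mse(stacked)`, `mse(pred_a)`, and `mse(pred_b)` compares the stacked model with its parts on the data used for fitting. In a real setting the meta-model should be fitted on held-out predictions and evaluated on separate data, otherwise the comparison is optimistic.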

The methodologies, techniques, and algorithms that can be used to combine deep learning models are numerous and always evolving. New techniques will continue to appear that accomplish the same task with better results. The key ideas to know about combining models are given below.

- Combining deep learning models is also called ensemble learning.

- Combining different models is done to improve the performance of deep learning models.

- Building a new model by combination requires less time, less data, and fewer computational resources than training from scratch.

- The most common method of combining models is averaging multiple models; taking a weighted average can improve the accuracy further.

- Bagging, boosting, and concatenation are other methods used to combine deep learning models.

- Stacked ensemble learning uses different combining techniques to build a model.
